In my master thesis, I explored caching the outputs of material graphs in a real-time path tracer to accelerate rendering of scenes with many complex material graphs. Below is a summary of the thesis as well as a download link for the full thesis. I wrote the thesis at the Institut für Visualisierung und Datenanalyse of the KIT with help from my advisor Vincent Schüßler. It was submitted on May 22, 2023.

Motivation

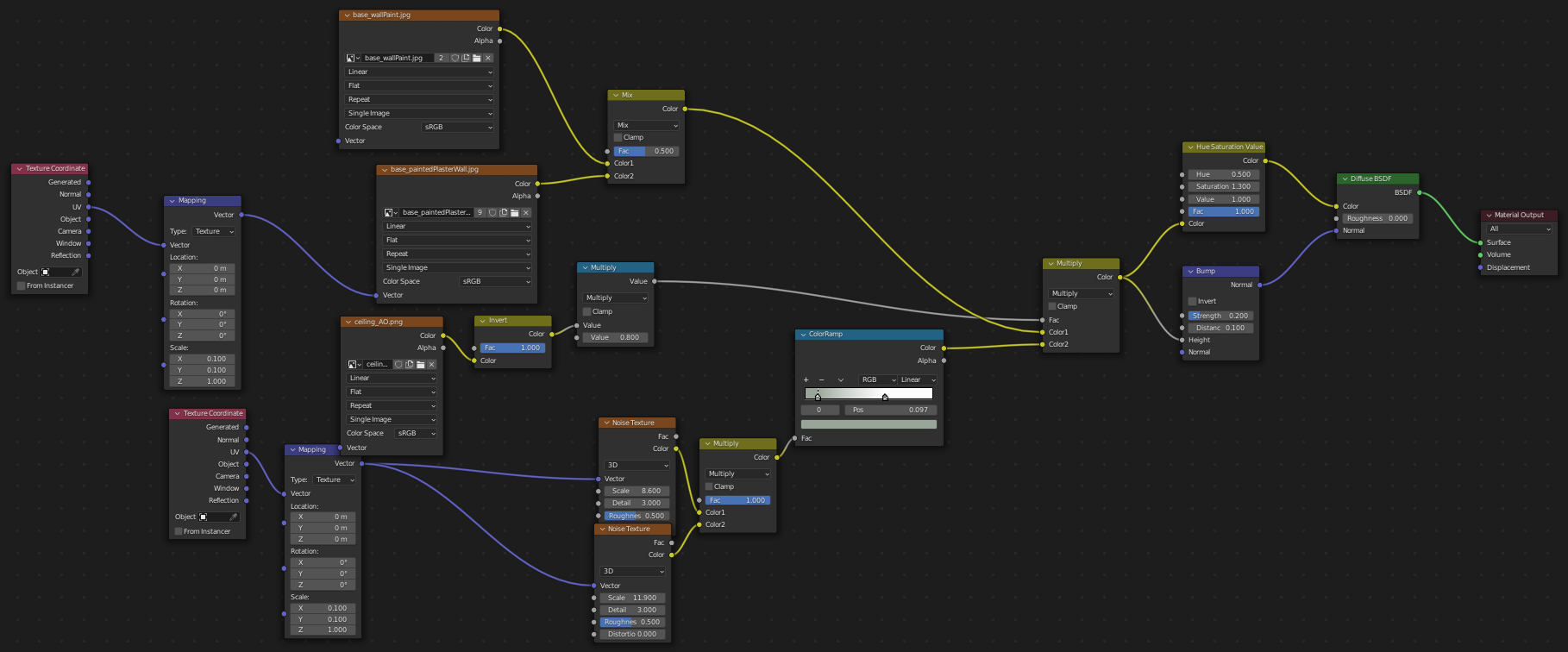

Material graphs provide an artist-friendly and highly flexible system for modelling the look of surfaces in a 3D scene. They can sample and blend multiple textures, evaluate procedural textures, distort texture coordinates and apply many other interesting effects. They are accessible even without prior programming experience because of their intuitive visual representation and because they strictly separate artistic material description from more technical aspects of shader development. To use material graphs in a path tracer, material-specific code must be evaluated at each ray hit. This is a major performance problem because many material graphs must be evaluated per pixel and frame and because the evaluation is highly divergent. GPUs use a great deal of SIMD parallelism (single instruction, multiple data) to achieve the throughput that is necessary for real-time path tracing. This means that groups of 32 or 64 threads (waves/subgroups/warps) must execute the same code paths at the same time. If threads take different branches, e.g., to evaluate different material graphs, they have to wait for each other instead of doing useful work. Caching the outputs of a material graph in an appropriate data structure and reusing them for later ray hits improves the performance significantly. Nearly all points visible in one frame (via direct or indirect hits) were already visible and have already been calculated in previous frames.

Material Graph Memoization on the GPU

The memoization pattern is a very general approach for improving the performance of certain functions. When a function is evaluated, its outputs are stored in a data structure indexed by the function parameters. When the function is called again with the same parameters, the data structure is queried for the results instead of evaluating the function again. Progressive Material Caching applies this pattern to an offline path tracer to speed up the rendering of individual images. Outputs of material graph nodes are memoized so that they can be reused by other paths that hit the same surface point. The cache data structure is a set of sparse, mipmapped, virtual textures stored in a hash table. The mipmap level is determined via ray cones. In my thesis, I use a similar approach for a real-time path tracer. A key difference to Progressive Material Caching is that I use the same cache for all frames of a real-time application. Once the cache is full, I evict (i.e. overwrite) cache entries based on an eviction strategy. I implemented random eviction, least recently written and least recently used. Eviction ensures that the data stored in the cache stays relevant as the camera moves through a potentially large 3D scene. Another difference is that I cache all outputs of a material graph in a single cache entry, instead of outputs of specific nodes in separate entries. This requires a more complex way of managing concurrent access of cache entries because a cache entry is bigger than what can be read or written with an atomic operation. Before writing a cache entry, I lock it with an atomic operation to ensure that no other thread writes it simultaneously. I do not lock cache entries for reading but instead use the heuristic of checking the key before and after reading the values. If it is the correct key in both cases, I assume that it has not been modified. This is fast, allows multiple threads to read simultaneously, and is reliable enough to not produce visible artifacts.

Tiled Cache

Additionally to the approach described above, I also developed a variant of the memoization system that stores texels in small tiles instead of individually. Especially for primary hits but also for certain secondary hits, ray hits of nearby pixels are likely to be nearby in texture space as well. In the tiled variant this means that they are likely to be on the same tile. This has two advantages. First, branching by cache hit and cache miss is less divergent because either the entire tile is cached or not. Second, hardware caches (which are independent from my software cache) are used more efficiently because the texels of a tile are stored compactly in memory. Another advantage of the tiled variant is that it supports bilinear filtering of cache entries. By overlapping tiles by one texel, I ensure that only texels from a single tile must be read for each bilinear sample. An important question is how missing tiles that were not already in the cache can be computed efficiently. If the thread that had a cache miss had to calculate the entire tile (i.e. evaluate the material graph 16 times for a 4x4 tile), the other threads in the wave would be stalled for a long time. Instead, I use wave operations to parallelize the calculation of tiles across the wave. For example, the 32 lanes of one wave on my RTX 4070 Ti can compute two 4x4 tiles in one iteration where each lane calculates only a single texel. My system supports any tile size that is smaller than the wave size and automatically schedules as many tiles per iteration as possible.

Results

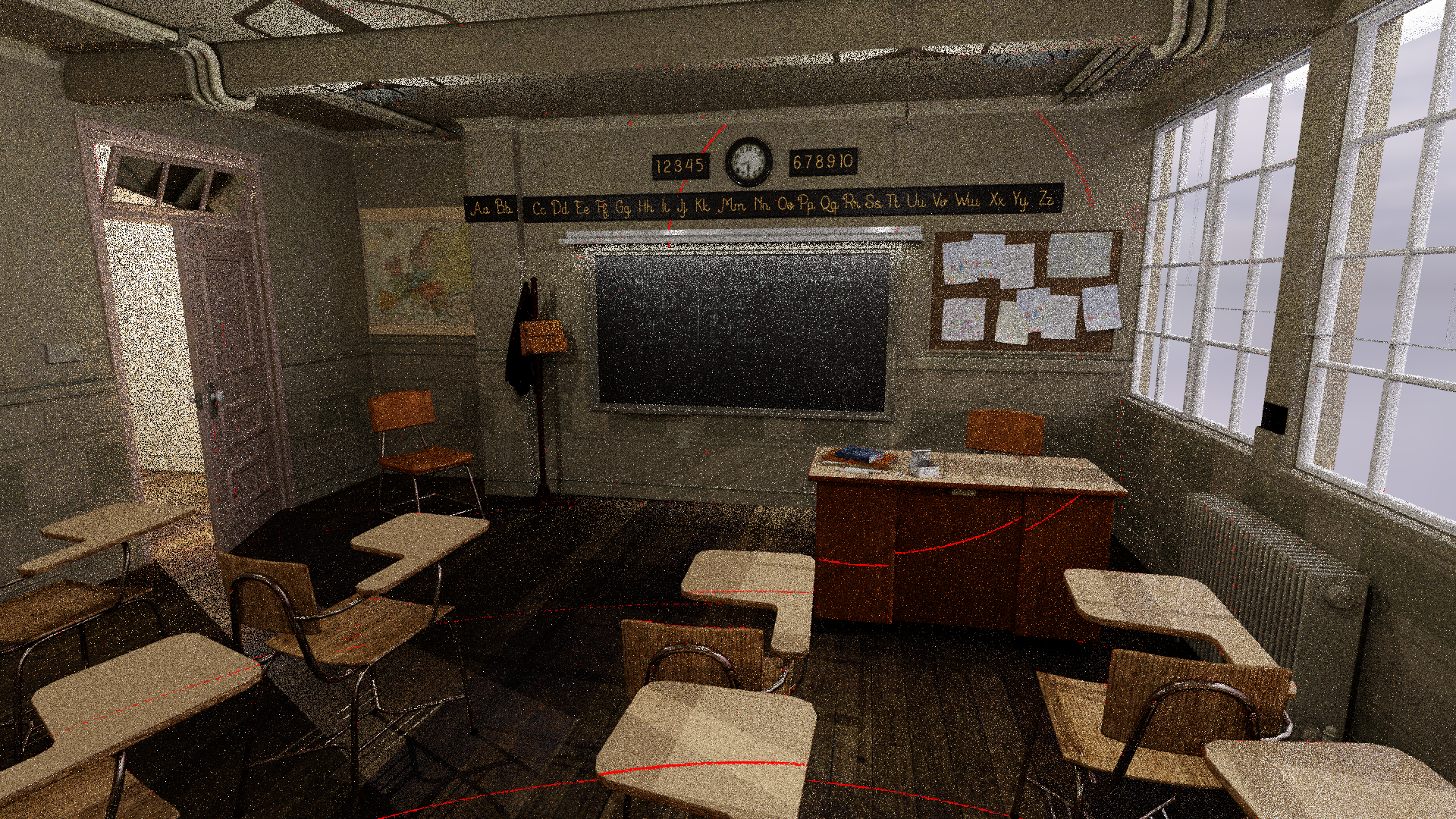

Material graph memoization is extremely effective in small scenes with many different and complex materials. It is less effective for large, open scenes and scenes with a small number of simple material graphs. Under the right conditions, I achieved hit rates of up to 99.98% and speedups of up to 680%. The tiled cache performs slightly better than the non-tiled one. Because the virtual textures are effectively a resampling step, the memoization system causes a slight loss of image quality. However, this is not very noticeable and can be eliminated almost entirely by caching only secondary hits. Caching only secondary hits results in very similar performance because evaluation of primary hits is rarely divergent and therefore relatively efficient even without memoization. I recommend the least recently used eviction strategy but all three strategies perform well. The image below shows a screenshot of the Blender example scene Classroom by Christophe Seux in my path tracer. Only red pixels had any cache miss in any path traced for the current frame. Cache misses are mainly due to new texels becoming visible as a result of camera motion.